Working with on-premise systems has its benefits, but it also comes with its share of challenges. For example, system maintenance, licensing, software upgrades, among others. In this technology-driven world, organizations are moving to cloud-based platforms to minimize or eliminate the challenges faced with on-premises systems. But what about the challenges involved in moving to the cloud?

Snowflake is a leading player in the cloud data warehouses market. Since pricing is essential for any cloud technology, it is vital to have a clear and in-depth understanding of the costs involved with Snowflake before implementing a solution.

This article will go through the Snowflake pricing model with some detailed examples of what comprises the Snowflake cost. We will also share ten best practice tips that have helped Nagarro reduce the overall Snowflake cost for its clients by as much as 40%!

The Snowflake pricing model

Snowflake separates the storage and compute costs - the major components of the overall Snowflake bill. The storage cost is charged based on the average monthly storage consumption (in Dollars). The 'compute cost' is set as per the total Snowflake credits consumed.

Snowflake credit is an entity created by Snowflake to calculate the compute cost. Credits are converted into Dollars in the monthly bill.

Value for credit varies based on the Snowflake edition, cloud provider, and the region where the Snowflake account is provisioned.

Snowflake is available in the following editions:

- Standard

- Enterprise

- Business Critical

- Virtual Private Snowflake (VPS)

Its cloud providers are:

- Amazon Web Services (AWS)

- Microsoft Azure

- Google Cloud Platform (GCP)

Storage cost:

Storage costs on Snowflake can either be pre-purchased or on-demand. Both these storage types have their pros and cons. To begin with, it is recommended to go with on-demand storage for some time and then opt for pre-purchased capacity. This helps in understanding the trend of average storage consumption over a time duration.

The storage cost is calculated per Terabyte (TB) consumed. For pre-purchased capacity, the charges are an average of $23 per month per terabyte; on the other hand, on-demand charges are around $40 per per month per terabyte. This cost can vary based on the region and the cloud provider.

Compute cost:

Compute cost is calculated in the form of credits consumed by virtual warehouses to perform activities such as data loading, data processing, transformations, etc.

Virtual warehouses come with t-shirt sizes starting from extra-small (x-small), which consume 1 credit per hour charged on a minimum of 1 minute and subsequently charged per second consumption. The virtual warehouse size can be increased up to 4X-large, consuming 128 credits per hour.

Users can create virtual warehouses by selecting the respective size, the number of clusters, and the scaling policy.

Other compute costs include credits for serverless Snowflake features, such as:

- Snowpipe

- Database replication

- Automatic clustering

- Materialized views maintenance

- Search optimization service

- Automatically refreshing external table metadata

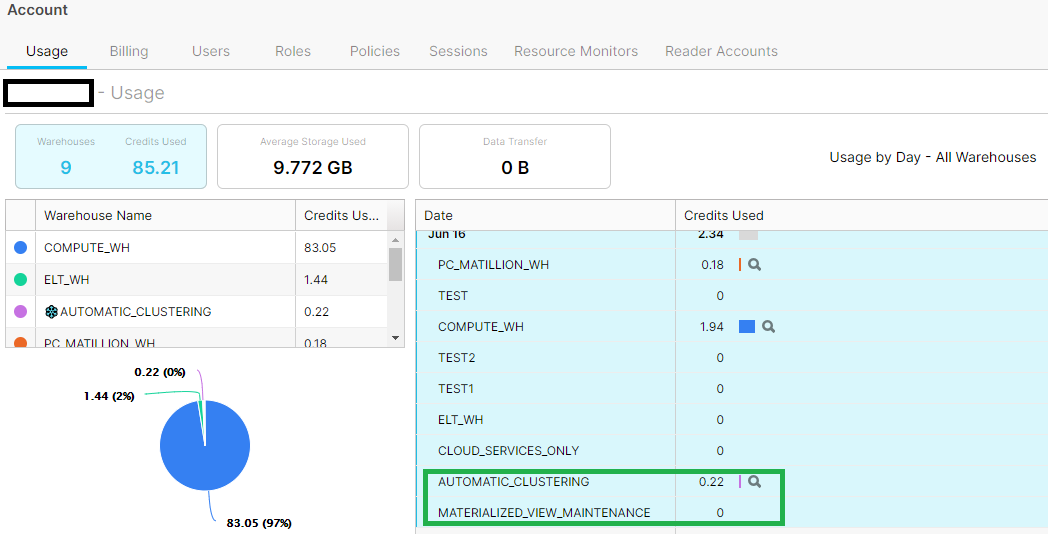

Snowflake fulfils these serverless features through managed virtual warehouses. In turn, it charges credits for the processing. It also gets added to the monthly Snowflake bill. Credits charged by Snowflake for the above serverless features can be seen under Account > Usage on the Snowflake web UI:

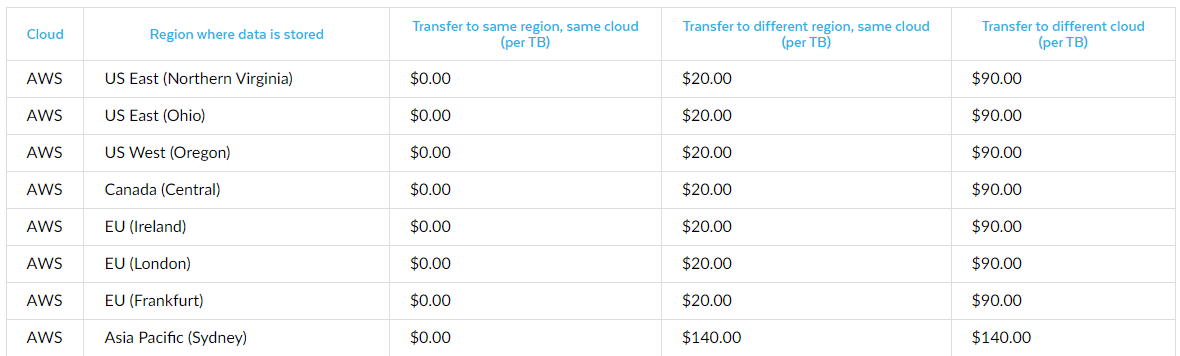

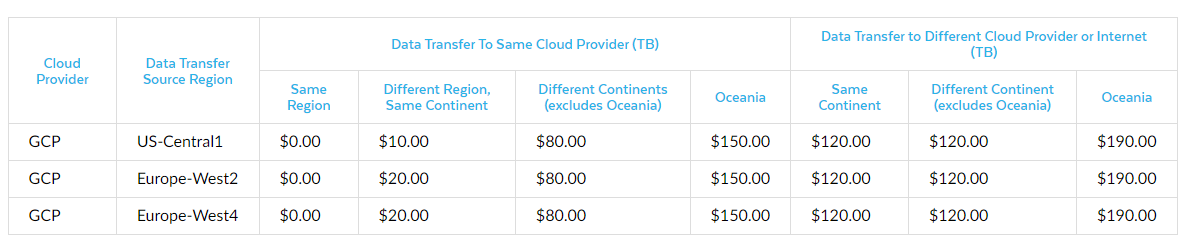

Snowflake offers data loading from cross-cloud providers and cross-region. You need not worry if your data is at a different cloud provider location. If the data is transferred from a different cloud provider's storage or from a different region, you need to pay for the data transfer and if you use features such as external tables, external functions, and data lake export. These tables show the data transfer costs incurred on transferring 1 terabyte (TB) of data:

NOTE: The above tables are taken from Snowflake's official website. They do not show the transfer costs for all AWS regions and may not be current. To view costs, refer to: https://www.snowflake.com/pricing/pricing-guide/

NOTE: The above tables are taken from Snowflake's official website. They do not show the transfer costs for all AWS regions and may not be current. To view costs, refer to: https://www.snowflake.com/pricing/pricing-guide/

Cloud service costs

The cloud services layer on Snowflake performs many tasks for its users in the background. We may not realize it but Snowflake allows us to focus more on streamlining business processes and maintaining data in the data warehouse that we have built.

Users need to pay the cost of Snowflake charges to maintain the third layer of its architecture – cloud services. This layer of Snowflake architecture is the brain of Snowflake since it performs complex tasks like query optimization, query plan creation, metadata management, infrastructure management, access control, etc.

Snowflake calculates the cost of cloud services as Snowflake credits every day. But do we pay for all the cloud services cost to Snowflake? As it turns out, the answer is "No" - Snowflake provides a discount on the cloud services cost. It is 10% of the overall credits consumed for computing on a particular day. Let's try to understand the Snowflake costs with examples:

Example 1:

Total credits consumed for compute : $50

Total credits charged for cloud services : $8

Total credits payable to Snowflake for the day : $50 + [if (8 minus 10% of $50) has a positive value]

: $50 + $3

Example 2:

Total credits consumed for compute : $100

Total credits charged for cloud services : $9

Total credits payable to Snowflake for the day : $100 + [if (9 minus 10% of $100) has a positive value]

: $100

Now, we know that Snowflake's pricing model charges the customers based on their storage and compute consumptions. Customers pay more when the best practices are not followed, and the data warehouse is set up with limited experience. This is where Nagarro has added value and helped clients reduce overall costs by leveraging best practices. Our Snowflake experts have helped reduce up to 40% of the total monthly bill in optimization projects on Snowflake for different industries, such as automotive and apparel.

So, what's the secret sauce behind how Nagarro has reduced the overall Snowflake cost? Let's find out:

10 pro tips to reduce overall Snowflake cost

![]()

1. Suspend virtual warehouse when not in use

Snowflake executes queries by using virtual warehouses (computing engines), which incur compute costs. This makes the ability to automatically suspend virtual warehouses a great Snowflake feature. Why pay for something when you are not using it?

We should not leave virtual warehouses to run idle when there are no queries to execute. It is best to auto-suspend them after certain minutes of idle time. The minimum auto suspension time users get from Snowflake's web UI is 5 minutes. But you can reduce it even further, to 1 minute, by running this SQL statement:

alter warehouse <warehouse_name> set auto_suspend = <num_in_seconds>;

2. Size virtual warehouses

A major part of the Snowflake bill consists of compute costs. Since the compute cost of a virtual warehouse depends on its size, it is best to start with an x-small virtual warehouse to run any queries. Monitor it for a few weeks before upgrading it to medium/large/other higher sizes.

3. Educate users to use the right warehouse

Snowflake users in your organization may not be following the recommended best practices when running SQL queries on their Snowflake worksheets.

Monitor users' queries (weekly or bi-weekly) to determine the trend/pattern of the queries and identify how long each query takes to complete. By understanding the types of queries they are running, educate them about the applicable best practices.

For example, if a user wants to take a glimpse at the data, they can run:

Select top 10 * from <table_name>;

Instead of running:

Select * from <table_name>;

4. Update query timeout parameter's default value

Queries on Snowflake are automatically aborted if they have been running for 172800 seconds (that's 48 hours or 2 days). In this case, Snowflake will charge for 172800 seconds to compute the query. The reason for a long-running query can be anything. For example, a user might have submitted a wrong query, which is taking a long time to execute.

Update the parameter STATEMENT_TIMEOUT_IN_SECONDS, which has the default value of 48 hours to a smaller duration, say 2 hours (or even lesser) to avoid queries that keep running for an inordinately long time.

5. Set up resource monitors

Organizations tend to have a monthly threshold or quota that they want to spend for compute on Snowflake. It is recommended to set up resource monitors for raising alerts and taking actions upon reaching the monthly credits consumption threshold. Abort all the running queries and suspend virtual warehouse immediately, complete all the running queries then suspend virtual warehouse, are some of the actions which can be taken upon reaching the credits threshold in the resource monitors. You can also set multiple thresholds, such as one threshold at 70%, the next one at 90%, and another at 100% of the monthly resource quota set on the resource monitor.

6. Avoid loading large files using the copy command

Generally, we may have a large amount of data to migrate or move to the Snowflake data warehouse. We might also be using huge CSV/semi-structured data files to copy data to the respective tables on Snowflake.

Do not load heavy files on Snowflake. Instead, it is better to break a single heavy file into multiple smaller chunks and load them using the COPY command. Snowflake divides the COPY command workload in parallel threads to load multiple files simultaneously. This requires less time in computing the virtual warehouse and means paying fewer Snowflake credits than loading a single, huge file.

7. Store data files in the same cloud provider and region

Since Snowflake supports major cloud providers such as AWS, Azure, and GCP to load data, it incurs data transfer costs when data is moved from a cloud provider, or a region that is not from the snowflake account's hosted cloud provider or region.

This makes it critical to choose a suitable cloud provider and region right when creating/provisioning the Snowflake account. To minimize data transfer costs, you must consider the region from where the data will be loaded in the long run.

8. Analyze account usage

It is essential to monitor account usage, especially query history and warehouse metering history. This helps track the credit consumption and finds out workloads that took maximum time over a period. You can then identify the patterns and areas from the query history that took longer to optimize and eliminate them.

For example, a query is scheduled to run 20 times a day in a dedicated x-small warehouse (considering the minimal workload). This query takes around 10 seconds to complete in each run. So the total average compute time for the query per day is 200 seconds.

However, Snowflake charges for a minimum of 1 minute every time the warehouse is resumed. This means that the user will pay 20*60 – in other words, the compute cost for 1200 seconds, which is six times higher than the actual consumption! Identify such patterns and take respective actions, such as changing schedules of the query, running in a shared virtual warehouse, etc.

9. Set up alerts for reader accounts

As a data provider or when data is shared with non-Snowflake customers, reader accounts are created. Consumers can execute queries on the data shared with them, but providers bear charges for their consumption.

Consumers can run any number of queries on database tables and can leave the virtual warehouse running for long. To save such avoidable costs, the data owner/provider can set data limits or account-level alerts to check the consumption of warehouses. Providers can also ensure that the virtual warehouse is configured as per their consumption requirements, i.e., as per auto-suspend, scaling policy, virtual warehouse size, and the number of clusters.

10. Use zero-copy cloning effectively

Cloning is another unique Snowflake feature that can be tapped effectively. Multiple environments (such as DEV, UAT, PROD) are created when working on a data warehousing project. If testing is required on the UAT environment that requires the latest data, the whole table/schema/database can be cloned from PROD to the UAT environment.

By cloning, only metadata is created for the cloned table, which points to the same storage as the original table. This ensures that cloning does not incur additional storage costs. The cloning feature can also fulfill other use cases where the same data is required for multiple purposes.

These tips help optimize snowflake costs and reduce the execution time of the queries to improve both the user and customer experience. We have gathered all this information from the practical experience of data warehousing and Snowflake experts at Nagarro.

Snowflake solutions at Nagarro

Nagarro offers tailormade Snowflake solutions to its clients competitively by deploying certified and experienced resources on Snowflake DWH projects.

Our Snowflake DWH team has great expertise across various cloud platforms and has implemented cloud solutions for major players in the market across domains such as automotive, energy, banking, etc. Thanks to cost optimizations, our clients could invest the saved amount in different areas, such as initiating a new Proof of Concept for a potential project, increasing their marketing spend budget, etc.

Do you want to optimize your Snowflake costs too but don't know where to start? We can help! Explore our offerings and get in touch with our experts!