Generative AI has introduced a new way to bring intelligence to every sphere. It opens myriad possibilities for business organizations in automation, knowledge management, research, software development, customer servicing, and more.

However, we are at the beginning of this AI revolution, where most organizations (barring the innovation leaders) are still identifying the relevant use cases, assessing the potential RoI, and debating the pros and cons.

At Nagarro, we believe that to understand and capture the true potential of Gen AI models or Large Language Models, we must understand how they work and know their strengths and limitations.

To go beyond the buzz and ask our AI experts to uncover the technologies behind Gen AI wonders like Chat GPT, BARD AI, and LaMDA.

In this article, Anurag Sahay, who leads AI initiatives at Nagarro, traces language models' evolution into Large Language Models (LLMs), their applications, pros, and cons.

LLMs are driving the current AI revolution, and for any enterprise to exploit its capabilities for potential gains, it is crucial to understand the composition and characteristics of LLMs.

What are language models?

Language Models (LMs) are mathematical models designed to understand, generate, and manipulate human language. They are a core component of many natural language systems used in applications ranging from machine translation and voice recognition to text summarization and chatbots.

Language models predict the likelihood of a sequence of words appearing in a text. This could be a sentence, a paragraph, or an entire document. They do this by learning the probabilities of different words or phrases following each other, allowing them to generate text statistically like the text used for training them.

Early language models and their applications

- Machine translation: Language models were integral in developing early machine translation systems. Given the sequence of words in the source language, they could predict the likelihood of a sequence of words in the target language.

- Speech recognition: In speech recognition, language models help determine the speech given a sequence of sounds or phonemes. The model could predict the most likely sequence of words that would produce the given sounds, greatly improving the accuracy of speech recognition systems.

- Information retrieval: Language models improved search algorithms by predicting the relevance of a document to a given query. The model could predict the probability of the query given the document, and this probability could rank documents.

- Spell checking and correction: Language models could predict the likelihood of a sequence of words that could identify and correct spelling mistakes. If a given word resulted in a low probability sequence but changing a single letter resulted in a high probability sequence, the model could suggest the change as a correction.

- Text generation: Though this wasn't as common in early models, language models were also used to generate text. For example, they could generate possible completions of a given sequence of words. But their outcomes were not very compelling.

Approaches to building language models

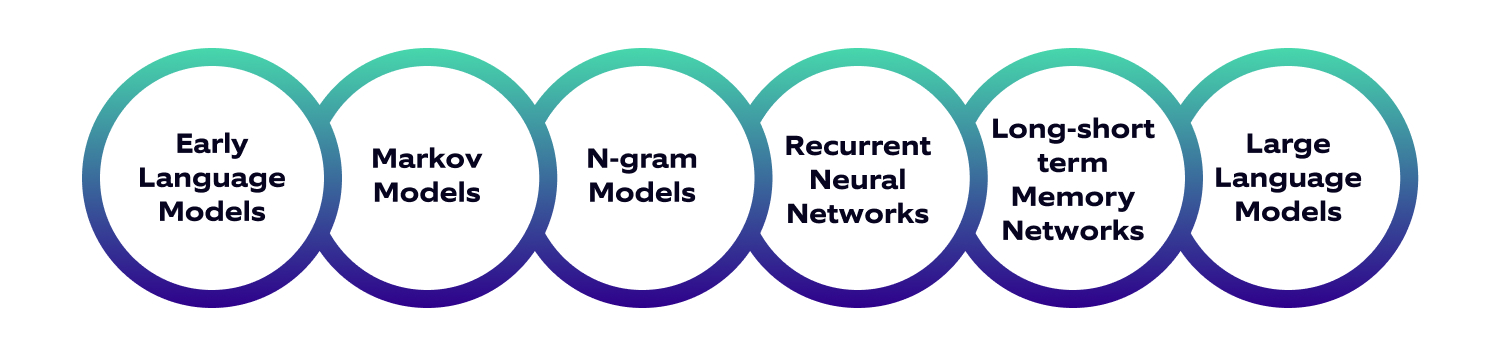

One of the earliest approaches to building language models was through Markov Models. Markov Models are statistical models based on the principle of Markov property, which assumes that the future state of a system depends only on its present state and not on the sequence of events that preceded it.

When applied to language modeling, a Markov Model considers each word as a "state." The next word in the sequence (or the next "state") is predicted solely based on the current word (or the current "state"), disregarding the sequence of words that came before it. This type of language model is known as a first-order Markov Model.

Let's consider a first-order Markov Model trained on English sentences. If the current word (state) is "dog," the model will generate the next word based on the likelihood of different words appearing after "dog" in its training data. The word "barks" might be selected as it's a commonly occurring word after "dog."

However, these first-order models can be quite limited in their predictive capacity due to their disregard for the broader context. Higher-order Markov Models can address this problem. In a second-order Markov Model, predicting the next state (word) depends on the current and the immediately preceding state. In a third-order model, it depends on the current state and the two preceding states, and so on.

The main advantage of Markov Models is their simplicity and efficiency. However, they are inherently limited in their ability to model language due to the Markov assumption, which doesn't hold for general language.

Language is heavily context-dependent, and the meaning of a word often relies on more than just a few words that immediately precede it. This is where more complex models, like neural networks, come into play, as they can model long-range dependencies in text.

N-gram models use another technique to build language models. An N-gram is a contiguous sequence of 'n' items from a sample of text or speech. In the context of language modeling, the 'items' are usually words, but they could also be characters or syllables.

For example, in the sentence "I love to play soccer," the 2-grams (or bigrams) would be: "I love," "love to," "to play," and "play soccer." The 3-grams (or trigrams) would be: "I love to, "love to play," and "to play soccer."

When used for language modeling, N-gram models assume that the probability of a word depends only on the previous 'n-1' words. This is a type of Markov assumption. For instance, a Bigram model would predict the next word based on the previous word alone. A trigram model would predict the next word based on the previous two words, and so on.

The probabilities for N-gram models are usually calculated based on the frequency counts of N-grams in the training corpus. For instance, the probability of the word "soccer" following the words "play" in a bigram model would be calculated as the frequency of the bigram "play soccer" divided by the frequency of the unigram "play" in the training corpus.

While N-gram models are simple and computationally efficient, they have some limitations. They don't account for long-term dependencies in language because they only consider a limited context window of 'n-1' words.

Moreover, they can suffer from data sparsity issues: if an N-gram isn't seen in the training data, the model won't accurately represent it. It is particularly problematic for larger values of 'n,' where the possible number of N-grams can grow exponentially.

Markov-based and N-Gram models were the earliest approaches to building language models, and many natural language use cases were attempted using these models. They were only partially successful.

The arrival of neural networks changed this significantly. Recurrent Neural Networks and Long-Short Term Memory Networks were instrumental in making this happen.

Recurrent Neural Networks

The Recurrent Neural Network (RNN) has a hidden state or memory that captures and retains things from past inputs. It processes a sequence of words one at a time, updating its hidden state at each step. It considers the current word and its memory to predict the correct next word in the sequence.

Despite their potential, vanilla RNNs suffer from a fundamental issue known as the "vanishing gradients" problem, which makes it hard for them to learn and retain long-term dependencies in the data. And predict the right things in long sequences.

Long Short-Term Memory Networks

Long-Short Term Memory Networks (LSTM) were designed to tackle the vanishing gradient problem. Along with the hidden state or the short-term memory, they have a cell state or a long-term memory that helps them remember information using gating mechanisms known as forget and output gates.

These gates help the LSTM decide what to remember and what to forget. The cell state can carry information from earlier time steps to later time steps, helping to keep important context available for when it's needed.

It makes LSTMs more effective at capturing long-range dependencies in text, such as the relationship between a pronoun and a far-off antecedent, compared to traditional RNNs.

Both RNNs and LSTMs have been superseded in many applications by Transformer-based models, which can more efficiently and effectively capture dependencies in text. Nonetheless, they were pivotal in developing neural approaches to language modeling, and they still find use in many contexts.

While RNNs and LSTMs have been very successful in modeling sequential data and overcome some of the limitations of earlier models, they have the following limitations:

- Sequential computation: RNNs and LSTMs rely on sequential computation, meaning they process inputs one after another. Therefore, the training process can be extremely slow, especially for long sequences, as later inputs must wait for the preceding inputs.

- Long-term dependencies: Even though LSTMs are specifically designed to handle long-term dependencies, they can still struggle with them, especially when sequences are extremely long. This is due to the "vanishing gradients" problem, where the contribution of information decays geometrically over time, making learning long-term dependencies challenging.

- Fixed-length context: For a specific timestep, the context that an LSTM or RNN considers for making predictions is of a fixed length, which can limit its ability to handle certain language tasks requiring a broader context.

The rise of Transformers technology

The limitations of the earlier language models necessitated an innovation known as the 'Transformers' technology that enabled:

- Parallelization: Unlike RNNs/LSTMs, Transformers do not process sequence data strictly sequentially. They use a mechanism called "self-attention" or "transformer attention" to simultaneously consider all the words in the sequence, making them much more parallelizable and faster to train.

- Capturing long-range dependencies: The self-attention mechanism in Transformers gives each word in the sequence a chance to directly influence each other, enabling the model to learn longer dependencies more effectively. A Transformer does not have to go through every intermediate step in a sequence to make predictions about related words, no matter how far apart.

- Dynamic context window: Transformers don't have a fixed context window. They assign different attention scores to different words in the sequence, allowing them to focus more on the important words and less on the less relevant ones. It gives them a "dynamic context window" to adapt to the specific task and data.

- Scalability: Transformers have proven highly scalable, achieving improved performance with increasing model size, data size, and compute power. This contrasts with RNNs and LSTMs, which need help with large sequences due to their sequential nature.

While Transformers have proven very effective and become the go-to model for many NLP tasks, they have limitations. For instance, they can be computationally intensive due to their attention mechanism and require large amounts of data for effective training.

Large Language Models (LLMs)

All this has led us to Large Language Models or LLMs. These models are trained on vast quantities of text data and have millions, or even billions, of parameters. They exploit this fundamental insight that 'scale & complexity leads to emergence.'

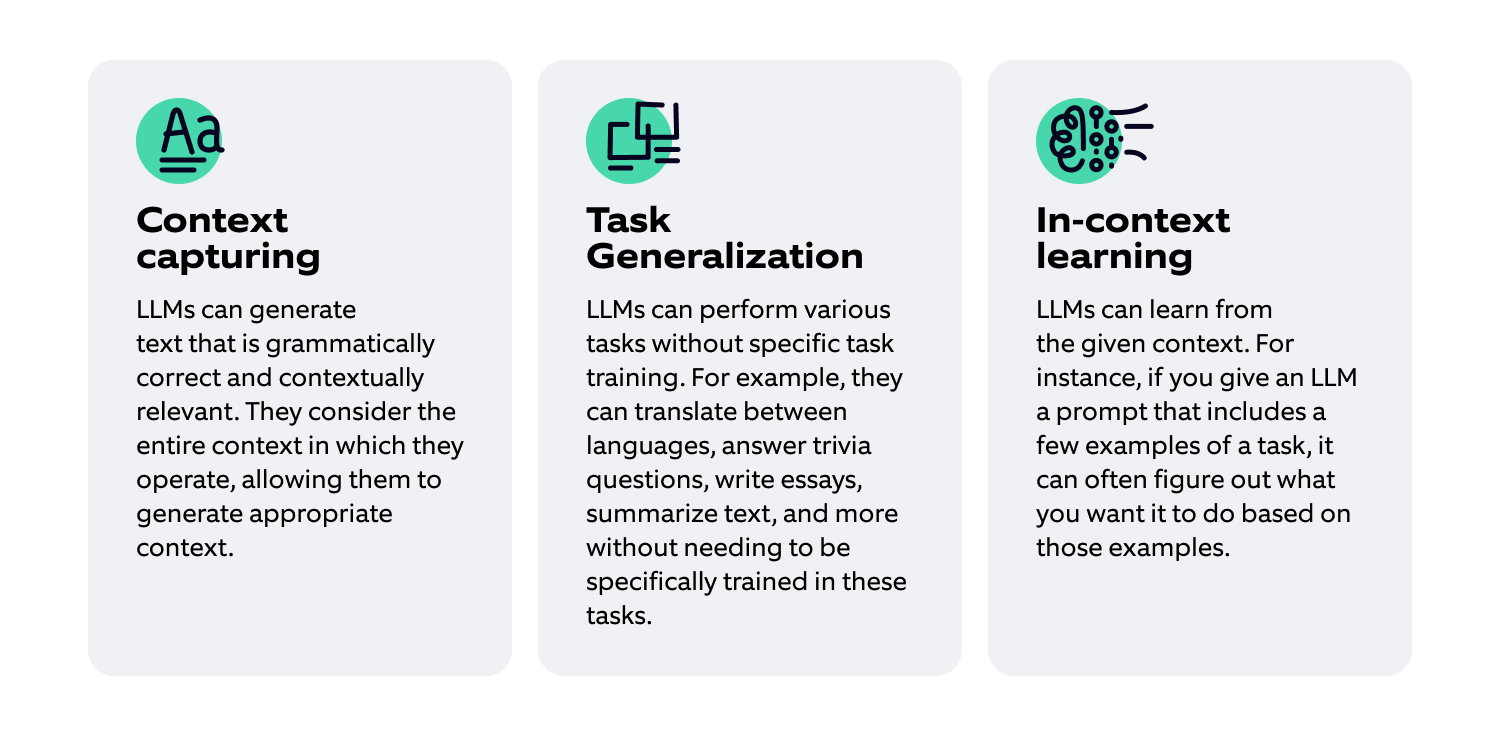

Scale in the context of LLMs refers to two main factors: the size of the model (in terms of several parameters) and the amount of data used to train the model. As models get larger and train on more data, "emergence" has been observed.

Emergence means the model begins to exhibit new behaviors or abilities it wasn't trained for. This happens because of the complexity and flexibility that comes with scale. Larger models have more parameters, allowing them to learn various patterns from the data.

An example of emergence in LLMs is zero-shot learning, where the model can perform tasks it wasn't explicitly trained for. For instance, a model might be trained on a dataset of general text from the internet.

However, it can still translate between languages, answer trivia questions, or even write poetry. It is possible because, during training, the model learns to represent and manipulate language in a way that generalizes to these tasks.

Another example of emergence is in-context learning, where the model learns to adjust its behavior based on the provided context or prompt. For instance, if you give the model a few examples of a task at inference time, it can often figure out what you want it to do based on those examples. The model was directly trained for this behavior; it emerges from the combination of the model's scale and how it was trained.

However, while emergence can lead to impressive capabilities, it can also lead to challenges. For instance, it's difficult to predict what capabilities will emerge from a given model, and these capabilities can sometimes include undesired behaviors.

Table 2: Advantages of Large Language Models

While LLMs can be incredibly powerful and versatile, they pose several challenges. For instance, it's difficult to predict what an LLM will do in a specific situation, and they can sometimes generate incorrect or inappropriate output. Controlling and understanding the behavior of LLMs is an active area of research.

Additionally, while the model can learn a lot from its training data, it's also limited by that data. If the training data has biases or errors, the model is likely to learn and reproduce those as well. It's also hard to control or interpret the behavior of very large models, which can be a problem in settings where transparency and accountability are important.

While LLMs are incredibly powerful and versatile, they also pose several challenges. For instance, it's difficult to predict exactly what a given LLM will do in a specific situation, and they can sometimes generate incorrect or inappropriate output. Consequently, comprehending and managing the behavior of these models is an ongoing focal point of research. Organizations and institutes working with these models must understand the behavior and limitations well before investing in LLMs.